Abstract

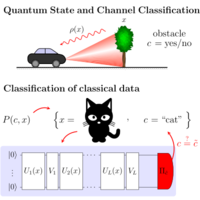

Quantum classification and hypothesis testing (state and channel discrimination) are two tightly related subjects, the main difference being that the former is data driven: how to assign to quantum states $\rho(x)$ the corresponding class $c$ (or hypothesis) is learnt from examples during training, where $x$ can be either tunable experimental parameters or classical data “embedded” into quantum states. The main question in any data-driven strategy is whether the model generalizes, namely whether it can predict the correct class even for previously unseen states. Here we establish a link between quantum classification and quantum information theory, by showing that the accuracy and generalization capability of quantum classifiers depend on the (Rényi) mutual informations $I(C{:}Q)$ and $I_2(X{:}Q)$ between the quantum state space $Q$ and the classical parameter space $X$ or class space $C$. Based on this characterization, we then show how different properties of $Q$ affect classification accuracy and generalization, such as the dimension of the Hilbert space, the amount of noise, and the amount of information from $X$ that is neglected via, e.g., pooling layers. Moreover, we introduce a quantum version of the information bottleneck principle that allows us to explore the various trade-offs between accuracy and generalization. Finally, to check our theoretical predictions, we study the classification of the quantum phases of an Ising spin chain, and we propose the variational quantum information bottleneck method to optimize quantum embeddings of classical data to favor generalization.

- Received 4 March 2021

- Accepted 30 September 2021

DOI: https://doi.org/10.1103/PRXQuantum.2.040321

Published by the American Physical Society under the terms of the Creative Commons Attribution 4.0 International license. Further distribution of this work must maintain attribution to the author(s) and the published article's title, journal citation, and DOI.

Popular Summary

Data-driven strategies are responsible for the tremendous advances in many fields such as pattern recognition, where the classification algorithm is directly “learnt” from data rather than being built via human-designed mathematical models. Quantum machine learning is a rapidly evolving new discipline that applies data-driven strategies to the quantum realm, where faster algorithms and more accurate estimation methods are theoretically available. Nonetheless, much theoretical work is still needed to understand how to fully exploit genuinely quantum features, such as entanglement, the no-cloning theorem, and the destructive role of quantum measurements, for machine learning applications.

Here we use tools from quantum information theory to study the problem of generalization in quantum data classification, where the data are either already given in a quantum form or first embedded into quantum states. We show that after “training” a quantum classifier using a few examples, the classification error that we get with new, previously unseen data can be bounded by entropic quantities, more specifically by the mutual information between the quantum state space and other classical spaces. Loosely speaking, our theorem shows that a quantum classifier generalizes well when it is capable of discarding as much as possible of the information that is unnecessary to predict the class.

We apply our general results to study fundamental properties, e.g., introducing a quantum version of the bias-variance trade-off, and to derive new algorithms, such as what we call the variational quantum information bottleneck principle. Our predictions are then tested in numerical experiments.
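As a rough illustration of the kind of entropic quantity mentioned above, the following sketch computes the mutual information between a classical label and a quantum state for a small ensemble, using the standard formula for classical-quantum states, $I(X{:}Q) = S(\sum_x p_x \rho_x) - \sum_x p_x S(\rho_x)$. It is not the authors' code and does not reproduce their bounds (which also involve a Rényi variant); the function names, the two-state qubit ensemble, and the depolarizing noise model are all assumptions chosen for illustration.

```python
import numpy as np

def von_neumann_entropy(rho):
    """Entropy S(rho) = -Tr[rho log2 rho], in bits."""
    eigenvalues = np.linalg.eigvalsh(rho)
    # Drop numerically zero (or slightly negative) eigenvalues: 0 log 0 = 0.
    eigenvalues = eigenvalues[eigenvalues > 1e-12]
    return float(-np.sum(eigenvalues * np.log2(eigenvalues)))

def cq_mutual_information(probs, states):
    """I(X:Q) = S(sum_x p_x rho_x) - sum_x p_x S(rho_x) for an ensemble."""
    average_state = sum(p * rho for p, rho in zip(probs, states))
    conditional = sum(p * von_neumann_entropy(rho)
                      for p, rho in zip(probs, states))
    return von_neumann_entropy(average_state) - conditional

def qubit_state(theta):
    """Pure qubit state cos(theta/2)|0> + sin(theta/2)|1> as a density matrix."""
    psi = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    return np.outer(psi, psi)

def depolarize(rho, noise):
    """Hypothetical noise model: mix rho with the maximally mixed state."""
    dim = rho.shape[0]
    return (1 - noise) * rho + noise * np.eye(dim) / dim

# Two equiprobable inputs embedded into orthogonal qubit states.
probs = [0.5, 0.5]
clean = [qubit_state(0.0), qubit_state(np.pi)]
noisy = [depolarize(rho, 0.5) for rho in clean]

info_clean = cq_mutual_information(probs, clean)
info_noisy = cq_mutual_information(probs, noisy)
print(f"I(X:Q) without noise: {info_clean:.4f} bits")
print(f"I(X:Q) with noise:    {info_noisy:.4f} bits")
```

In this toy setting, adding noise lowers the information the quantum states retain about the input, which is one of the properties the abstract lists as affecting accuracy and generalization.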